If you believe in the following, I am going to try to change your mind:

“Turing machines aren’t realistic. They need infinite memory so they can’t be implemented. Any real computing device is limited in its memory capacity and, therefore, equivalent to a finite state machine.”

This is a fairly commonly held view. I used to believe in it myself, but had always found it deeply unsatisfying. After all, modern-day computers have limited memory capacity but closely resemble Turing machines. And Turing’s abstract formulation arguably led to the digital revolution of the 20th century. How, then, can the Turing machine be a physically irrelevant mathematical abstraction? If all of our computers and devices are of the weaker class of computers, namely, finite state machines, why do they have to look so much like Turing machines?

It is important to clarify this, especially for neuroscience and computational biology. If we think of Turing-equivalence as this abstract level of computation that is impossible to physically achieve, then we block out classical insights from the theory of computation and cannot even begin to ask the right questions. (I recently wrote a manuscript asking the question “where is life’s Turing-equivalent computer?” and showed that a set of plausible molecular operations on RNA molecules is sufficient to achieve Turing-equivalent computation in biology).

It took a good amount of reading and thinking to finally understand the meaning of Turing-equivalence. I will explain what I believe to be the only consistent way of looking at this issue. Here is the short version:

When we say Turing machines require “unbounded memory”, what we mean is that memory cannot be bounded by the systems descriptor, not that it cannot be bounded by other things such as the laws of physics or resource constraints. Turing-equivalence only requires a system in which memory usage grows, not one in which memory is infinite.

Below I explain precisely what all that means. I will try to convince you that this is the only consistent way of looking at this and that Turing, himself, shared this perspective.

What are Turing Machines and Finite State Machines?

Let me first introduce three computation systems, from weakest to strongest: look-up tables, finite state machines, and Turing machines.

A look-up table is the simplest form of computation. It consists of a table with two columns: an input column and an output column. When given an input, you find the row with the matching string in the first column and output the string in the second column of that same row. Look-up tables have no memory and are only capable of solving problems with a finite sized input set; the set of possible inputs is limited and predetermined in the table’s descriptor. For example, there is a look-up table with several thousand rows that given a tic-tac-toe board returns the optimal winning move. However, there is no description of a look-up table that can compute the remainder of an arbitrarily large number when divided by 3. Try it! Give me a table that solves the remainder problem and I can tell you a number which it is missing from its input column.

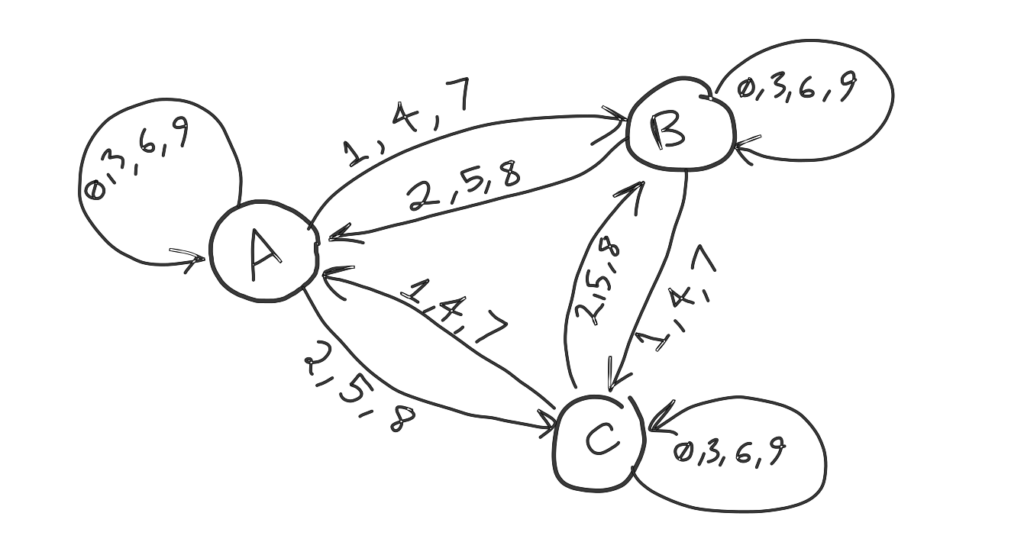

Finite state machines are a computation system one notch more powerful than look-up tables. They can deal with problems that have an infinitely large input set. The input is broken down into a string of symbols (or digits). Each symbol is fed sequentially into the machine. The machine has a number of predefined states and at every given moment it is in one of those states. The machine’s state determines how it will transition into a new state when fed a new symbol. For example, here is a graph representing a finite state machine with three states that computes a number’s remainder when divided by 3 (something a look-up tables couldn’t solve). A crucial feature of finite state machines is that (in contrast to look-up tables) the size of the input is not bounded by the descriptor.

However, there are some problems that a finite state machine cannot solve. For example, there is no descriptor of a finite state machine that can solve the following counting problem: given a string consisting of two letters X and Y (e.g. “YXXYX”) determine whether there were more Xs, or more Ys, or both letter occurred an equal number of times. Try it! Try constructing a finite state machine that counts the number of occurrences of each letter. Since you are forced to predetermine all the states in the machine’s description, it cannot count up to arbitrarily large numbers. So there will always be a string “XXYXYXXY…Y” that it will fail on.

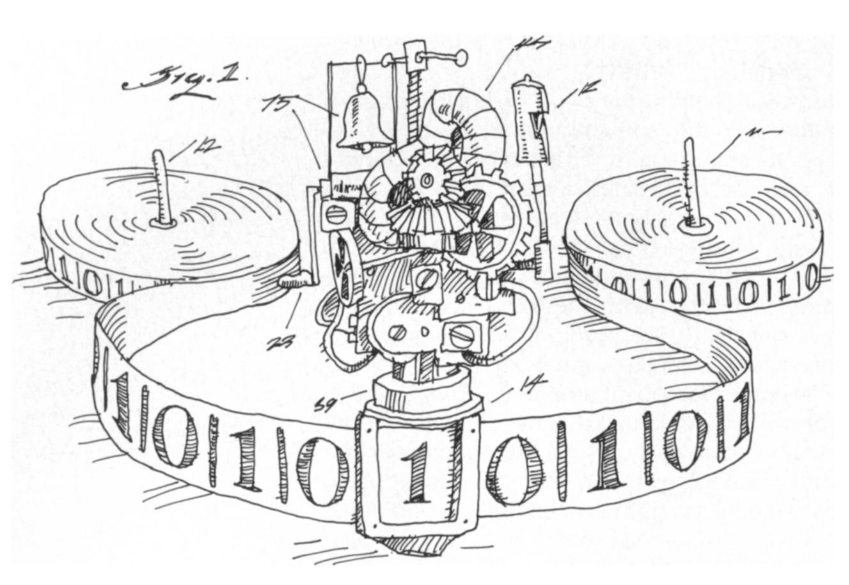

Finally, Turing machines are the most powerful computation system. A Turing machine is like a finite state machine except that it has access to an infinitely long bidirectional memory tape divided into squares where it can read from and write symbols on to. The tape head contains a finite state machine. At every moment in time it only has access to a single square of the tape and must make a decision about what to write and which direction to move (left or write) using only what it can immediately see. Remarkably, Turing machines can solve any computable problem and simulate any other computation system. For this reason, Turing machines are called a universal computation system.

A crucial feature of Turing machines is that (in contrast to finite state machines) the number of states is not bounded by the descriptor. This is because part of what determines a Turing machine’s state is the symbols written onto the tape. There is no way of knowing how many tape squares will be used before seeing the input. Another way to say this is that the system’s memory usage can grow as a function of input size. This is not the case for finite state machines, where all the possible states are predetermined in the machine’s descriptions. (This feature is not unique to Turing-equivalent computers; computationally weaker machines like push-down automata can also expand memory usage, allowing them to solve problems like the counting problem defined above).

Turing machines are as real as it gets

Firstly, it is unbounded memory, not infinite memory, that is necessary for Turing-equivalence. The infinite memory tape is only defined for convenience. At any given moment, a Turing machine is only using a finite number of squares. We can redefine Turing machines to have a finite memory tape that grows whenever the head reaches the end of the tape. (A Turing machine is also equivalent to a finite state machine with two stacks that can each grow arbitrarily large. That is another way of reformulating it to avoid an infinitely large memory tape).

“Alright“, one may say, “but Turing machines still require unbounded memory. There are physical limits on how much memory of a real physical device can use. So any real physical implementation of a Turing machine will be limited in its number of states, and therefore is equivalent to a finite state machine“.

The problem with this argument is that by the same logic, no real physical device can be a finite state machine either! Because finite state machines assume unbounded input size. (Remember, unbounded input size is what distinguishes them from look-up tables). Any real physical implementation of a finite state machine will inevitably have limits on its input size. (Because, the machine will eventually run out of energy and time). Why would unbounded memory capacity be any different from unbounded energy or time? So is every physically realistic computer equivalent to a look-up table then?

Finite state machines are no less of a mathematical abstraction than Turing machines are. And both are relevant to studying the real world. You can implement a finite state machine by creating something that recruits more energy when needed. Similarly, you can implement a Turing-equivalent machine by creating something that recruits more memory space [and energy] when needed. The fact that both of these will eventually hit some limit does not make them physically unrealizable.

A physical implementation of Turing machines (with practical constraints on its memory, time, and energy) is more powerful than a physical implementation of finite state machines (with practical constraints on its time and energy). This is because they both still have the same descriptor/input/output relationships as their abstract models. There exists a Turing machine descriptor that can solve the counting problem irrespective of what the physical constraints on memory, energy and time are.

On the other hand, there is no single descriptor of a finite state machine that can solve the counting problem. You may be able to construct one that can count up to some very large number, but the descriptor of your finite state machine will have to change if the maximum input size changes.

It is important to understand that constraints on memory space are not conceptually different from constraints on time or energy. To be consistent, Turing machines and finite state machines are either both realistic models, or neither one is.

Turing machines use finite means

Part of the confusion about Turing-equivalence may have stemmed from the title of Minsky’s book “Computation: Finite and Infinite Machines“. In it he categorizes machines into those that are finite (such as finite state machines) and those that are infinite (such as Turing machines). This categorization has been widely popularized while less attention is paid toward the book’s section on “why study infinite machines?”. Minsky explains that “instead of an infinite tape, we need only an inexhaustible tape factory” and that “this picture gives a reassuringly finite picture [of Turing machines]“. Minsky recognized that the study of Turing machines is the study of “growing automata“, rather than “genuinely infinite automata“. Unfortunately, the book’s title does not convey this nuanced perspective. (Personally, I don’t find the finite/infinite categorization to be helpful at all. Finite state machines, although finite in their memory capacity, are unbounded in their number of steps and energy consumption. So they are infinite in the same sense that Turing machines are infinite).

The notion of “effective calculability” or “computability” that the early mathematicians were concerned with was intimately tied to what is practically achievable through finite means. In fact, Turing explicitly mentions in his 1936 paper that “computable numbers are [defined to be] those whose decimals are calculable by finite means“. He precluded the existence of an infinitely large alphabet, only allowed a finite number of “states of mind” for the machine, and did not allow an infinite number of steps. Only as a matter of convenience did he endow his abstract machine with an infinite memory tape. (Although, to be precise, he didn’t call it an “infinite memory tape”; rather he simply did not impose any bounds on its size and recognized that some machines are programmed to write infinitely many symbols). It was clear to everyone at the time that an infinite memory tape does not violate the condition of “finite means” as it can easily be reformulated into a growing tape.

Modern day computers are Turing-equivalent because for every computable problem, there is a valid assembly code that solves that problem. You can run code that solves prime factorization or code that solves the travelling salesman problem. The fact that your computer may run out of memory or run out of batteries for a large input is not important, because you can take the same code and run it on another computer with more resources and it will produce the correct output. No such thing would be possible if your computer operated as a finite state machine. Because, for finite state machines, the limits on memory capacity are imposed by the descriptor, not by the physical resources.

Turing-equivalence is precisely what you get when you ask the question: what is the most powerful scope of computation that finite physical devices can achieve? To consider them as infinite or physically unrealistic is deeply mistaken and obscures the classical insights from the theory of computation.